Abstract

Large Multimodal Models (LMMs), characterized by diverse modalities, architectures, and capabilities, are rapidly emerging. In this paper, we aim to address three key questions: (1) Can we unveil the uncertainty of LMMs in a model-agnostic manner, regardless of the modalities, architectures, and capabilities involved? (2) The complexity of multimodal prompts leads to confusion and uncertainty in LMMs; how can we model this relationship? (3) LMM uncertainty is ultimately reflected in multimodal responses; how can we mine uncertainty from these multimodal responses? To answer these questions, we propose Uncertainty-o: (1) Uncertainty-o is a model-agnostic framework for unveiling uncertainty in LMMs, independent of their modalities, architectures, and capabilities. (2) We empirically explore multimodal prompt perturbation for unveiling LMM uncertainty and share our insights and findings. (3) We propose multimodal semantic uncertainty, which enables quantifying uncertainty from multimodal responses. Experiments on 18 benchmarks across various modalities and 10 LMMs (open- and closed-source) validate the efficacy of Uncertainty-o in reliably estimating LMM uncertainty, thereby facilitating various downstream tasks, including hallucination detection, hallucination mitigation, and uncertainty-aware Chain-of-Thought (CoT).

Overview

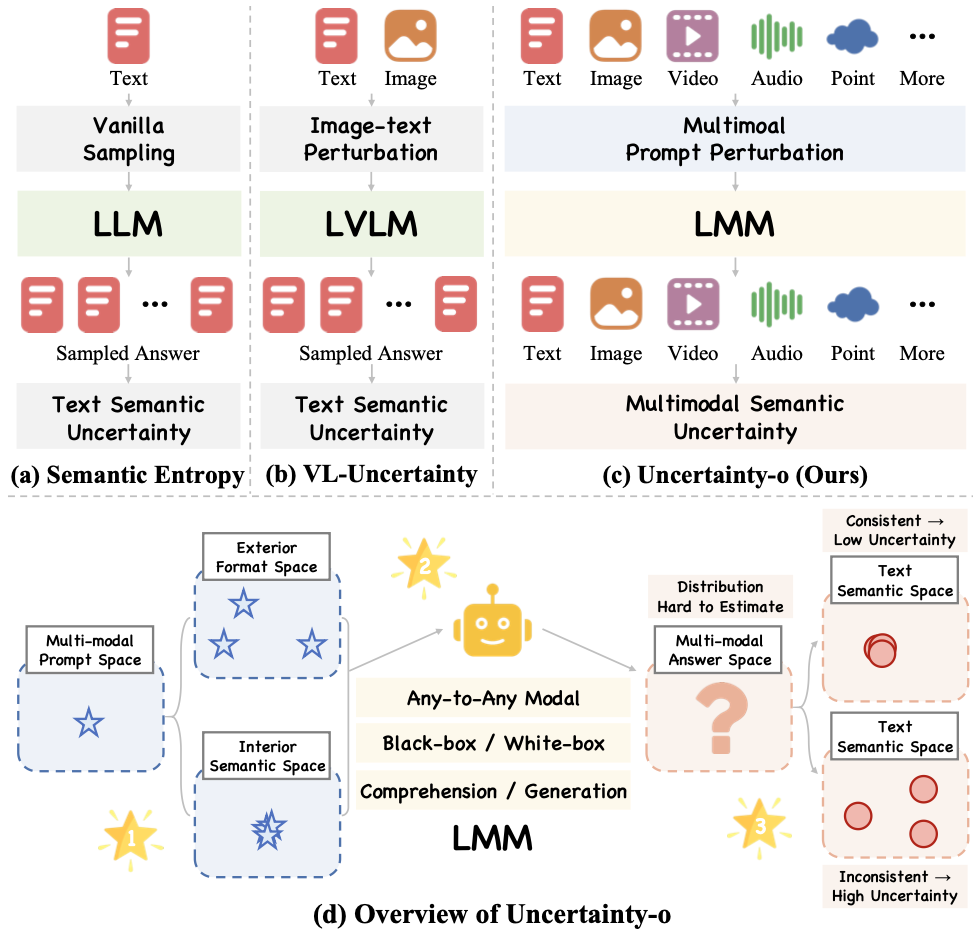

Comparison with Previous Works and Overview of Uncertainty-o. Uncertainty-o captures uncertainty in large multimodal models in a model-agnostic manner. It achieves reliable uncertainty estimation via multimodal prompt perturbation. Using multimodal semantic uncertainty, which maps multimodal answers into a textual semantic space, we can mine uncertainty from multimodal responses.
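The idea of mapping multimodal answers into text space can be illustrated with a minimal sketch. It assumes two hypothetical helpers that are not part of any published Uncertainty-o interface: `to_text`, a describer that turns an image, video, audio, or point-cloud output into a text description (for example, a captioning model), and `semantic_key`, which canonicalizes a description so that equal keys mean "same meaning". The entropy over the resulting meaning groups then serves as the uncertainty.

```python
import math
from collections import Counter
from typing import Callable, Hashable, Sequence


def multimodal_semantic_uncertainty(
    responses: Sequence[object],
    to_text: Callable[[object], str],
    semantic_key: Callable[[str], Hashable],
) -> float:
    """Entropy (in nats) over semantic groups of multimodal responses.

    to_text:      hypothetical describer mapping a non-text output to text.
    semantic_key: hypothetical canonicalizer; equal keys = same meaning.
    """
    if not responses:
        raise ValueError("need at least one response")
    # 1) Project every multimodal response into text space.
    descriptions = [to_text(r) for r in responses]
    # 2) Group descriptions by meaning and count the size of each group.
    counts = Counter(semantic_key(d) for d in descriptions)
    n = len(descriptions)
    # 3) Shannon entropy of the empirical distribution over meanings.
    return -sum((c / n) * math.log(c / n) for c in counts.values())


if __name__ == "__main__":
    # Toy "generated images", represented only by the objects they contain.
    outputs = [{"objects": ["cat", "sofa"]}, {"objects": ["sofa", "cat"]}, {"objects": ["dog"]}]

    def describe(img):  # stand-in for a captioning model
        return "a photo of " + " and ".join(img["objects"])

    def key(text):  # order-insensitive set of mentioned objects
        return frozenset(text.replace("a photo of ", "", 1).split(" and "))

    # Two meanings: {cat, sofa} twice and {dog} once, so entropy ≈ 0.6365.
    print(multimodal_semantic_uncertainty(outputs, describe, key))
```

How good this estimate is depends entirely on the describer and the equivalence test; the sketch only shows where they plug in.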

Method

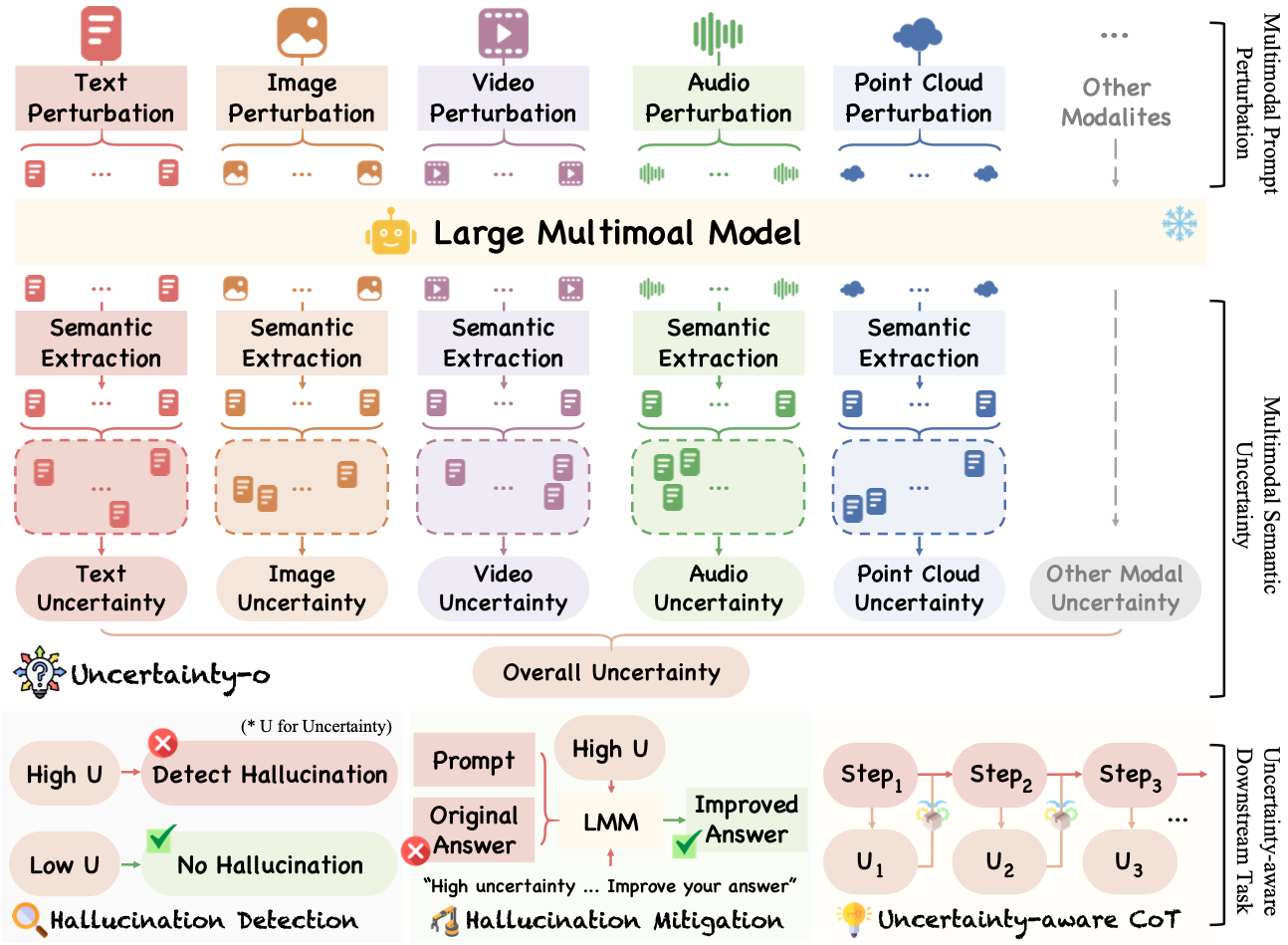

Pipeline of Our Uncertainty-o. Given a multimodal prompt and a large multimodal model, we perform multimodal prompt perturbation to generate diverse responses. Because of the model's inherent epistemic uncertainty, which surfaces under perturbation, these responses typically vary. To quantify this uncertainty, we apply semantic clustering to the collected responses and compute their entropy: responses are grouped into semantically similar clusters, and the entropy across these clusters serves as the final uncertainty measure. Higher entropy indicates greater variability in responses and thus lower confidence, while lower entropy reflects higher consistency and thus higher confidence.
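The clustering-then-entropy step can be sketched as follows. This is an illustration under stated assumptions, not the authors' implementation: clusters are formed greedily by comparing each response with each cluster's first member, and `naive_equivalent` is a deliberately weak, hypothetical placeholder (exact match after lowercasing and stripping punctuation). In practice a stronger semantic judge, such as bidirectional entailment with an NLI model, would take its place.

```python
import math
from typing import Callable, List, Sequence


def _normalize(text: str) -> List[str]:
    # Lowercase and drop punctuation so "A red car." and "a red car!" compare equal.
    return "".join(ch for ch in text.lower() if ch.isalnum() or ch.isspace()).split()


def naive_equivalent(a: str, b: str) -> bool:
    """Hypothetical placeholder for semantic equivalence (surface match only)."""
    return _normalize(a) == _normalize(b)


def semantic_clusters(
    responses: Sequence[str], equivalent: Callable[[str, str], bool]
) -> List[List[str]]:
    """Greedily put each response into the first cluster whose representative it matches."""
    clusters: List[List[str]] = []
    for r in responses:
        for cluster in clusters:
            if equivalent(cluster[0], r):
                cluster.append(r)
                break
        else:  # no existing cluster matched, so start a new one
            clusters.append([r])
    return clusters


def cluster_entropy(clusters: Sequence[Sequence[str]]) -> float:
    """Shannon entropy (nats) of the cluster-size distribution; higher means less confident."""
    n = sum(len(c) for c in clusters)
    return -sum((len(c) / n) * math.log(len(c) / n) for c in clusters if c)
```

For example, the responses `["A red car.", "a red car", "A blue truck.", "A red car!"]` fall into clusters of sizes 3 and 1, giving an entropy of about 0.5623, while three variants of "yes" form a single cluster with entropy 0.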

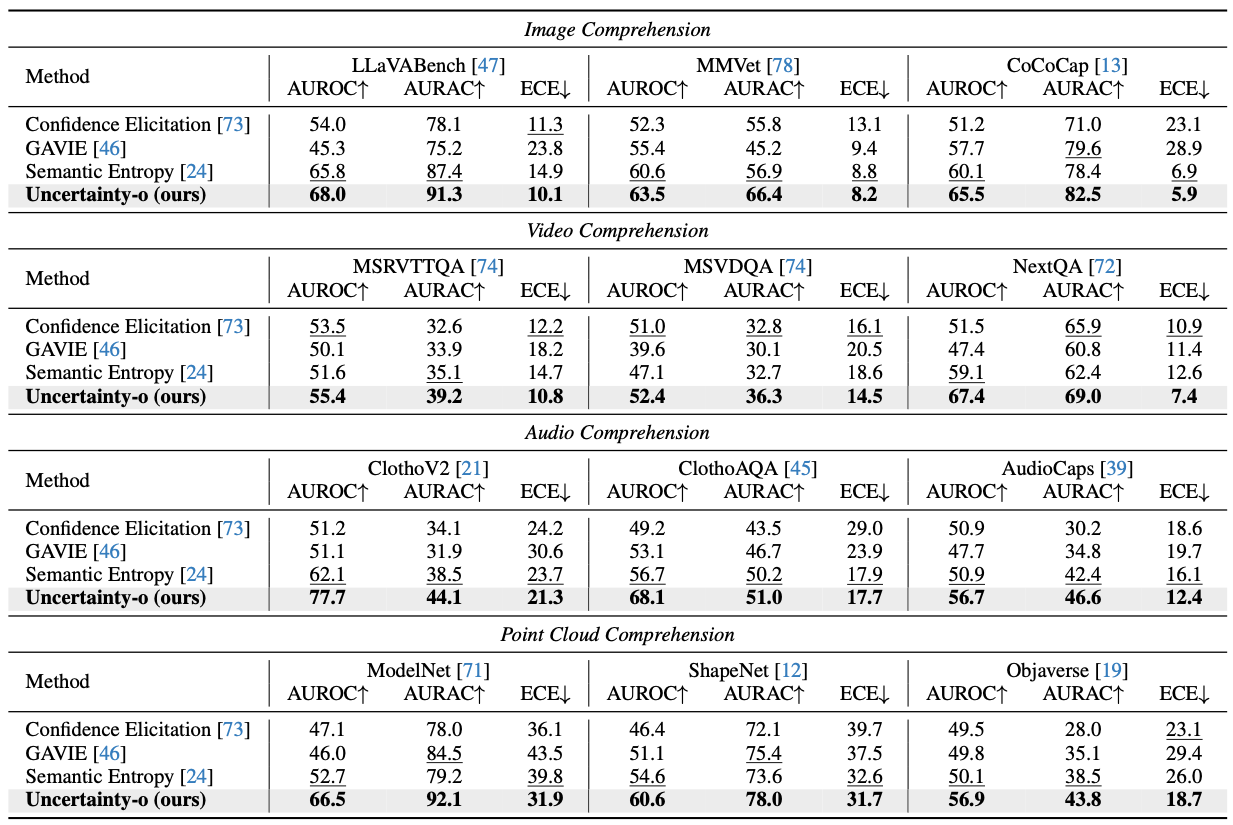

Comprehension Hallucination Detection Results

Comprehension Hallucination Detection Results. Uncertainty-o consistently outperforms strong baselines by a clear margin in LMM comprehension hallucination detection. We use InternVL, VideoLLaMA, OneLLM, and PointLLM for image, video, audio, and point cloud comprehension, respectively. The best results are in bold, and the second-best results are underlined.
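Using an uncertainty estimate for hallucination detection amounts to treating it as a score: the higher the uncertainty, the more likely the response is hallucinated. The metric behind the table is not stated in this text, so the sketch below is only an illustration under that assumption. It flags responses with a hypothetical threshold and scores the overall ranking with AUROC (the probability that a hallucinated response receives higher uncertainty than a correct one), a common choice for this kind of evaluation.

```python
from typing import List, Sequence


def flag_hallucinations(uncertainties: Sequence[float], threshold: float) -> List[bool]:
    """Flag a response as hallucinated when its uncertainty exceeds a (hypothetical) threshold."""
    return [u > threshold for u in uncertainties]


def auroc(uncertainties: Sequence[float], is_hallucination: Sequence[bool]) -> float:
    """Pairwise AUROC: share of (hallucinated, correct) pairs ranked correctly; ties count 0.5."""
    pos = [u for u, y in zip(uncertainties, is_hallucination) if y]
    neg = [u for u, y in zip(uncertainties, is_hallucination) if not y]
    if not pos or not neg:
        raise ValueError("need at least one hallucinated and one correct response")
    wins = 0.0
    for p in pos:
        for q in neg:
            if p > q:
                wins += 1.0
            elif p == q:
                wins += 0.5
    return wins / (len(pos) * len(neg))
```

The quadratic pairwise loop keeps the definition transparent; for large evaluation sets, a rank-based formula or a library routine would be preferable.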

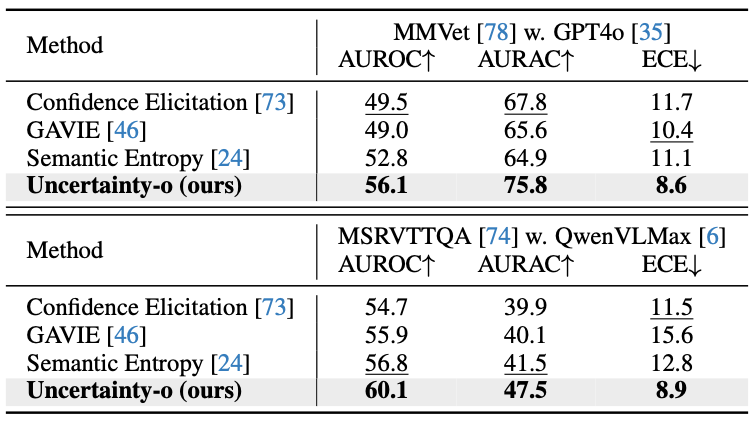

Hallucination Detection for Closed-Source LMMs

Hallucination Detection for Closed-Source LMMs. We use GPT-4o for image comprehension and Qwen-VL-Max for video question answering.

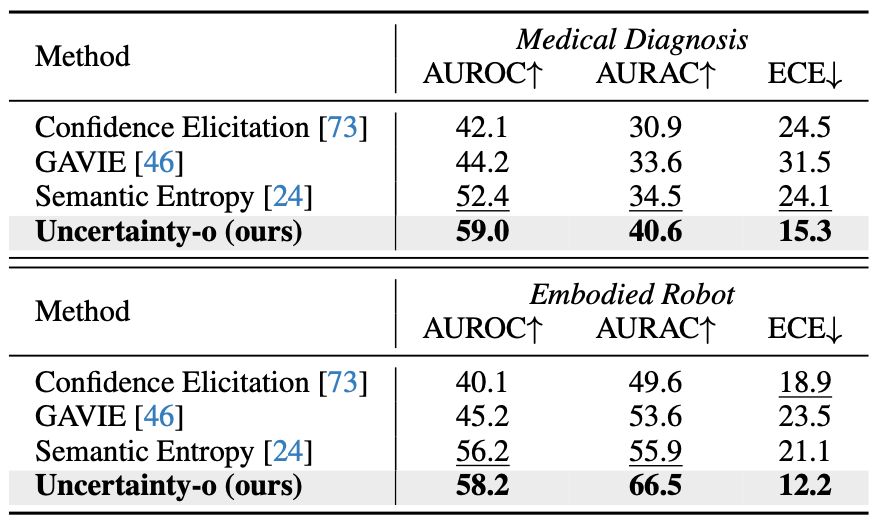

Hallucination Detection for Safety-Critical Tasks

Hallucination Detection for Safety-Critical Tasks. We use the MIMIC-CXR benchmark for medical image diagnosis and the OpenEQA benchmark for embodied video question answering.

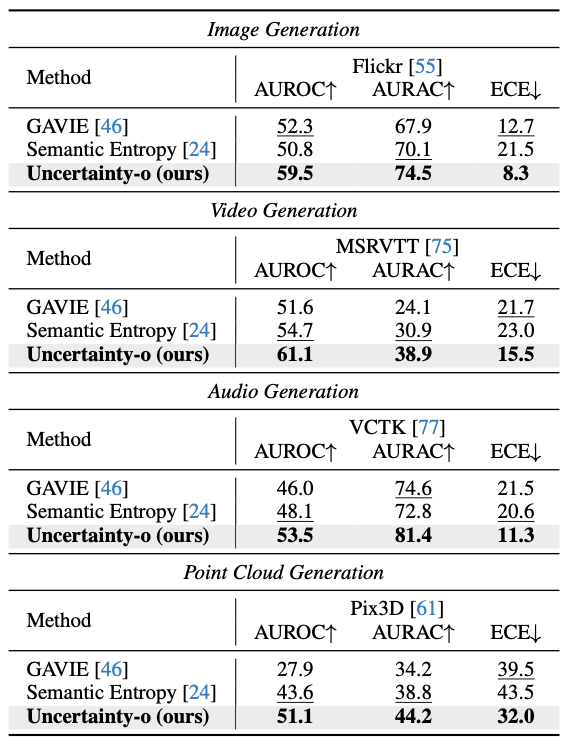

Generation Hallucination Detection Results

Generation Hallucination Detection Results. We use Stable Diffusion, VideoFusion, AnyGPT, and RGB2point for image, video, audio, and point cloud generation, respectively.

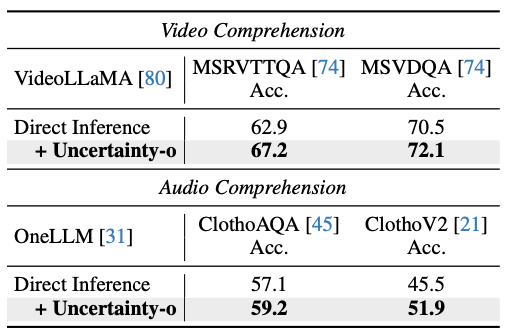

Hallucination Mitigation Results

Hallucination Mitigation Results. Uncertainty-guided revision effectively mitigates hallucination.
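The revision procedure itself is not described in this section. The sketch below shows one plausible form of uncertainty-guided revision, offered as an assumption rather than as the authors' method: if the estimated uncertainty of an answer exceeds a threshold, the model is asked to revisit its answer. `generate` and `estimate_uncertainty` are hypothetical callables (for example, an LMM API wrapper and a perturbation-plus-entropy estimator), and the threshold, round limit, and prompt wording are illustrative.

```python
from typing import Callable


def revise_if_uncertain(
    prompt: str,
    generate: Callable[[str], str],                     # hypothetical LMM call
    estimate_uncertainty: Callable[[str, str], float],  # hypothetical (prompt, answer) -> uncertainty
    threshold: float = 0.5,
    max_rounds: int = 2,
) -> str:
    """Answer, then request revisions while the estimated uncertainty stays above threshold."""
    answer = generate(prompt)
    for _ in range(max_rounds):
        u = estimate_uncertainty(prompt, answer)
        if u <= threshold:
            break  # confident enough: keep the current answer
        revision_prompt = (
            f"{prompt}\n\nYour previous answer was: {answer}\n"
            f"Its estimated uncertainty is {u:.2f}, which is high. Re-examine the input "
            "and revise the answer, removing any content you cannot verify."
        )
        answer = generate(revision_prompt)
    return answer
```

Capping the number of rounds keeps the cost bounded even when the uncertainty never drops below the threshold.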

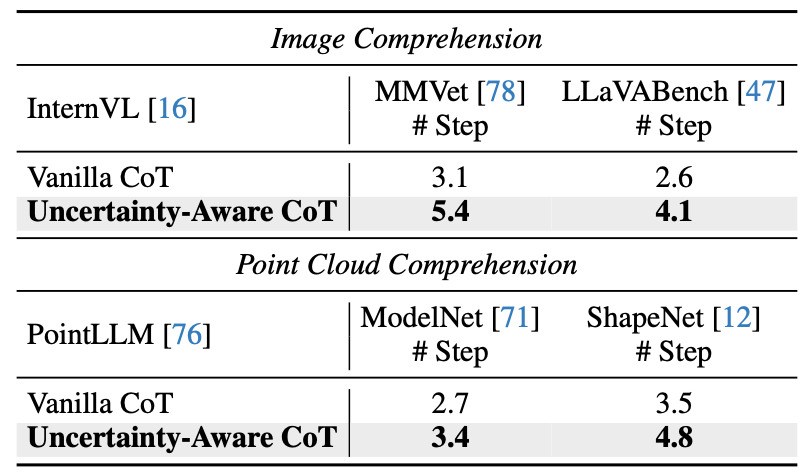

Uncertainty-Aware Chain-of-Thought Results

Uncertainty-Aware Chain-of-Thought Results. Our estimated uncertainty enriches the reasoning context, facilitating a more thorough thinking process. We report the average number of reasoning steps.
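One way to feed the estimated uncertainty into the reasoning context, together with a simple way to count reasoning steps, is sketched below. The prompt wording, the 0.5 threshold, and the "Step k:" output format are all hypothetical choices for illustration; the section does not specify how the uncertainty is phrased in the prompt or how steps are counted.

```python
import re
from statistics import mean
from typing import Sequence


def uncertainty_aware_cot_prompt(question: str, uncertainty: float, threshold: float = 0.5) -> str:
    """Prepend the estimated uncertainty to a CoT prompt (hypothetical wording)."""
    level = "high" if uncertainty > threshold else "low"
    guidance = (
        "Reason carefully step by step and double-check each step."
        if level == "high"
        else "Reason step by step."
    )
    return (
        f"{question}\n\nEstimated uncertainty: {uncertainty:.2f} ({level}). {guidance}\n"
        "Number each reasoning step as 'Step k:' before giving the final answer."
    )


# Matches lines that begin with "Step <number>:", the format requested above.
STEP_RE = re.compile(r"^\s*Step\s+\d+\s*:", re.MULTILINE)


def average_reasoning_steps(outputs: Sequence[str]) -> float:
    """Average count of 'Step k:' lines per model output."""
    return mean(len(STEP_RE.findall(o)) for o in outputs)
```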

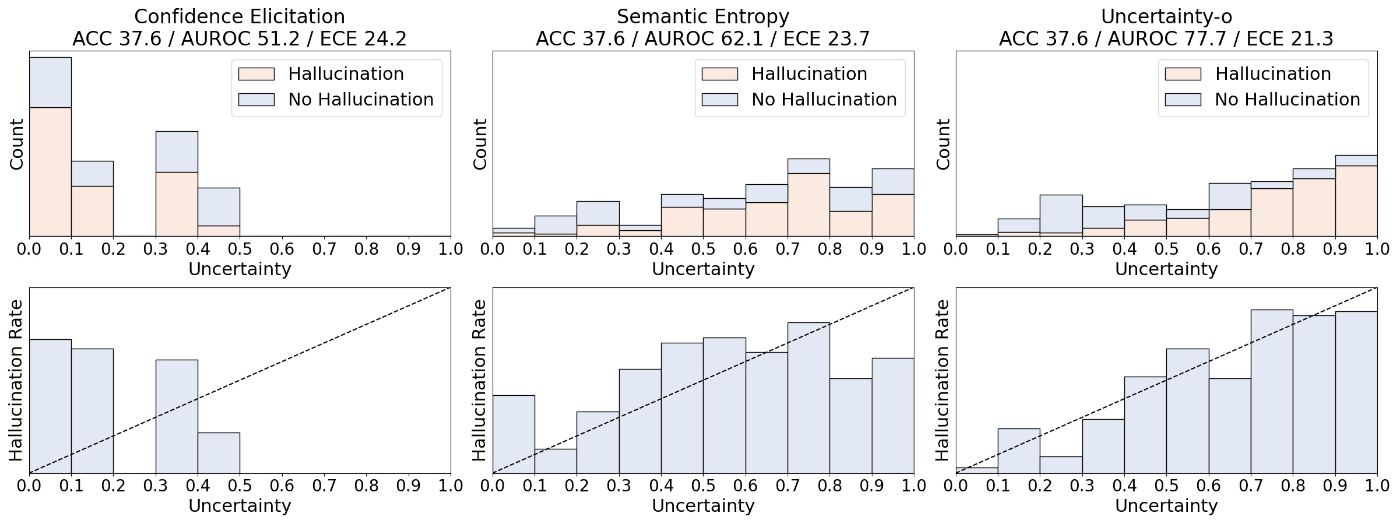

Reliability of Our Estimated Uncertainty

Reliability of Our Estimated Uncertainty. From the comparison with previous methods, we observe that: (1) compared to Confidence Elicitation, Uncertainty-o avoids the over-confidence problem, in which low uncertainty (i.e., high confidence) is inaccurately assigned to hallucinatory responses; (2) compared to Semantic Entropy, Uncertainty-o provides more reliable uncertainty estimates, i.e., the uncertainty is more closely aligned with the error rate in each bin. Results are from OneLLM on ClothoV2.
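The per-bin comparison between uncertainty and error rate can be made concrete with a reliability-diagram style computation. This is a sketch under assumptions: entropies are first normalized to [0, 1] by dividing by log N (the maximum entropy when each of N sampled responses forms its own cluster), bins are equal-width, and the summary gap is a count-weighted mean of |mean uncertainty − error rate|, analogous to expected calibration error. The exact binning used for the figure is not given here.

```python
import math
from typing import List, Sequence, Tuple


def normalize_entropy(h: float, n_responses: int) -> float:
    """Scale entropy to [0, 1] by its maximum, log(n_responses) (assumption)."""
    return h / math.log(n_responses) if n_responses > 1 else 0.0


def reliability_bins(
    uncertainties: Sequence[float], is_error: Sequence[bool], n_bins: int = 10
) -> List[Tuple[float, float, int]]:
    """Return (mean uncertainty, error rate, count) for each non-empty equal-width bin."""
    bins: List[List[Tuple[float, bool]]] = [[] for _ in range(n_bins)]
    for u, e in zip(uncertainties, is_error):
        idx = min(max(int(u * n_bins), 0), n_bins - 1)  # clamp u == 1.0 into the last bin
        bins[idx].append((u, e))
    out = []
    for b in bins:
        if b:
            mean_u = sum(u for u, _ in b) / len(b)
            err = sum(1 for _, e in b if e) / len(b)
            out.append((mean_u, err, len(b)))
    return out


def calibration_gap(bins: Sequence[Tuple[float, float, int]]) -> float:
    """Count-weighted mean |uncertainty - error rate|; lower means better aligned."""
    total = sum(c for _, _, c in bins)
    return sum(c * abs(u - err) for u, err, c in bins) / total
```

An over-confident estimator shows up here as bins with low mean uncertainty but a high error rate, which inflates the gap.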

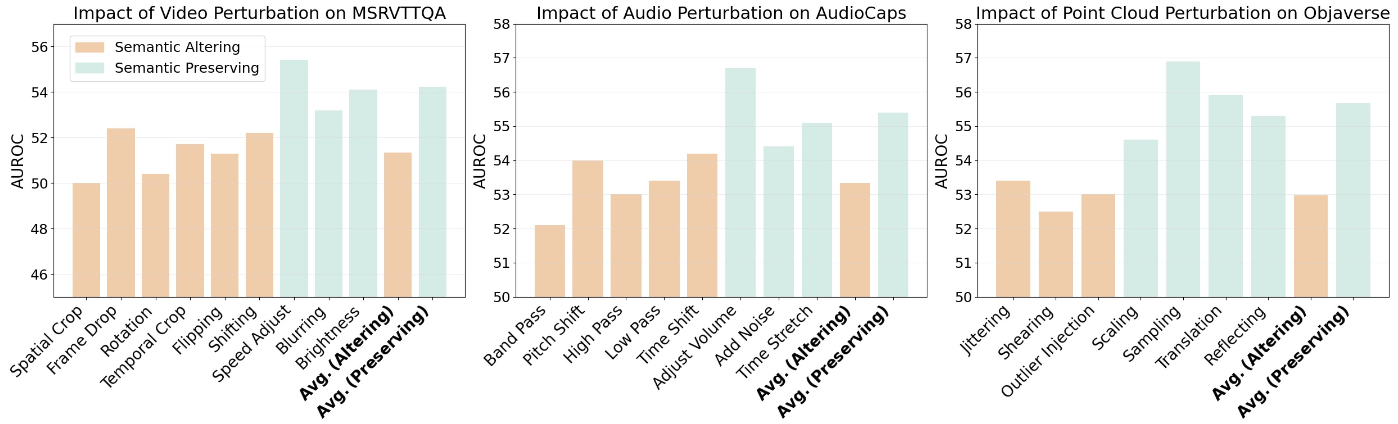

Empirical Comparison of Prompt Perturbations

Empirical Comparison of Different Prompt Perturbations. On average, semantic-preserving perturbations are more effective for eliciting LMM uncertainty than semantic-altering ones. Results are from comprehension hallucination detection for video, audio, and point cloud.
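To make the distinction between the two kinds of perturbation concrete, here is a toy sketch on a text question plus a small grayscale image stored as nested lists. The specific perturbations are hypothetical examples, not the ones evaluated in the figure. A rephrasing template and a slight brightness shift keep the meaning intact (semantic-preserving). Blanking half of the image can remove the very content the question is about (semantic-altering). Each perturbed prompt would then be sent to the LMM and the responses clustered.

```python
import random
from typing import List, Tuple

Image = List[List[float]]  # toy grayscale image, pixel values in [0, 1]


def rephrase(question: str, rng: random.Random) -> str:
    """Semantic-preserving text perturbation via hypothetical rephrasing templates."""
    templates = ["{q}", "Please answer the following. {q}", "Question: {q} Answer briefly."]
    return rng.choice(templates).format(q=question)


def jitter_brightness(img: Image, rng: random.Random, max_delta: float = 0.05) -> Image:
    """Semantic-preserving image perturbation: small global brightness shift, clamped to [0, 1]."""
    delta = rng.uniform(-max_delta, max_delta)
    return [[min(1.0, max(0.0, p + delta)) for p in row] for row in img]


def mask_columns(img: Image, rng: random.Random) -> Image:
    """Semantic-altering image perturbation: blank a random band spanning half the width."""
    w = len(img[0])
    width = w // 2
    start = rng.randrange(0, w - width + 1)
    return [[0.0 if start <= j < start + width else p for j, p in enumerate(row)] for row in img]


def perturbed_prompts(
    question: str, img: Image, k: int, seed: int = 0, semantic_preserving: bool = True
) -> List[Tuple[str, Image]]:
    """Return k perturbed (question, image) prompts of the requested kind."""
    rng = random.Random(seed)  # seeded for reproducible perturbations
    prompts = []
    for _ in range(k):
        if semantic_preserving:
            prompts.append((rephrase(question, rng), jitter_brightness(img, rng)))
        else:
            prompts.append((question, mask_columns(img, rng)))
    return prompts
```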

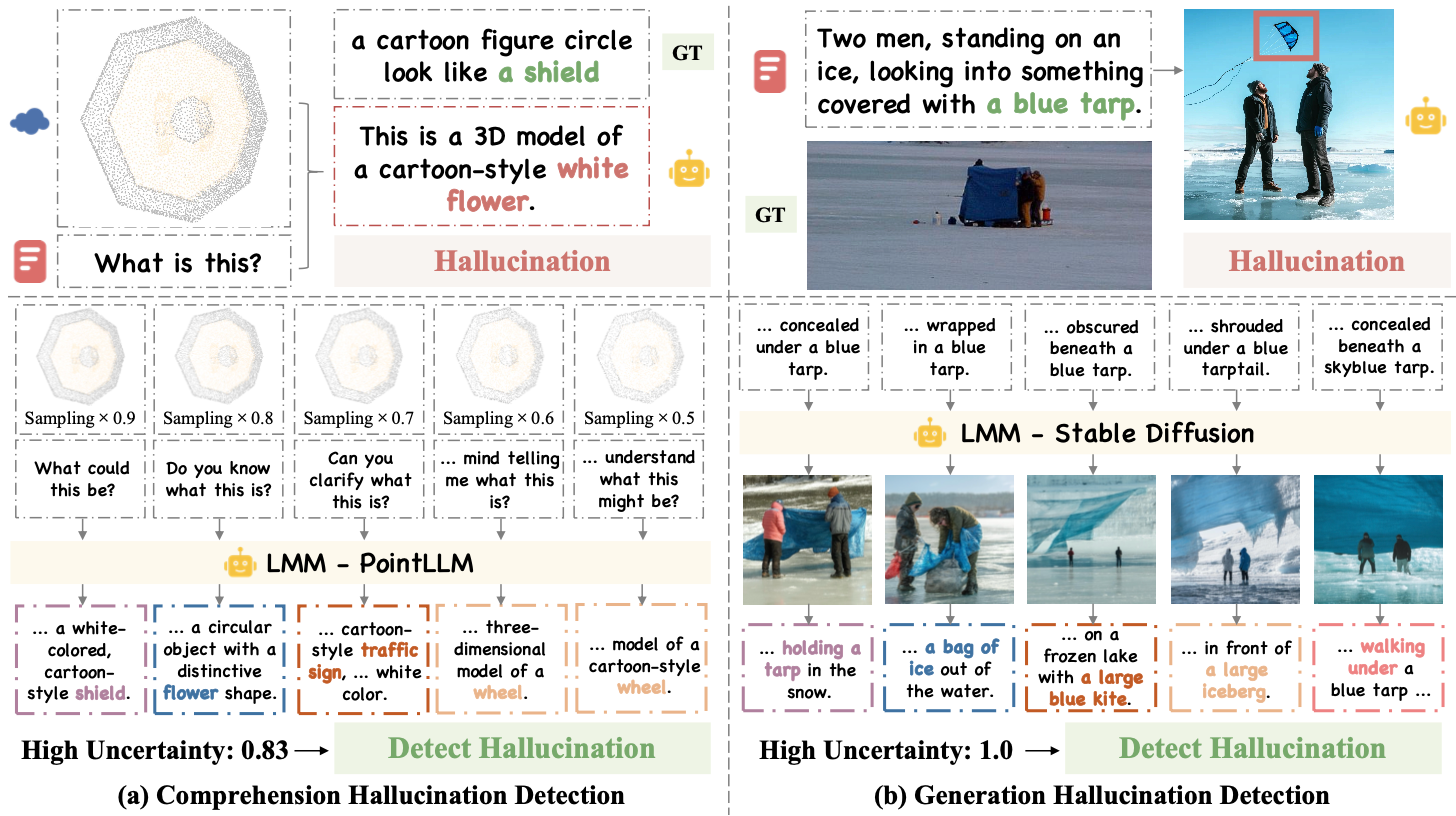

Qualitative Results

Qualitative Results of Successful Hallucination Detection. Uncertainty-o accurately detects both comprehension and generation hallucinations thanks to its reliably estimated uncertainty. Cases are from Objaverse and Flickr.